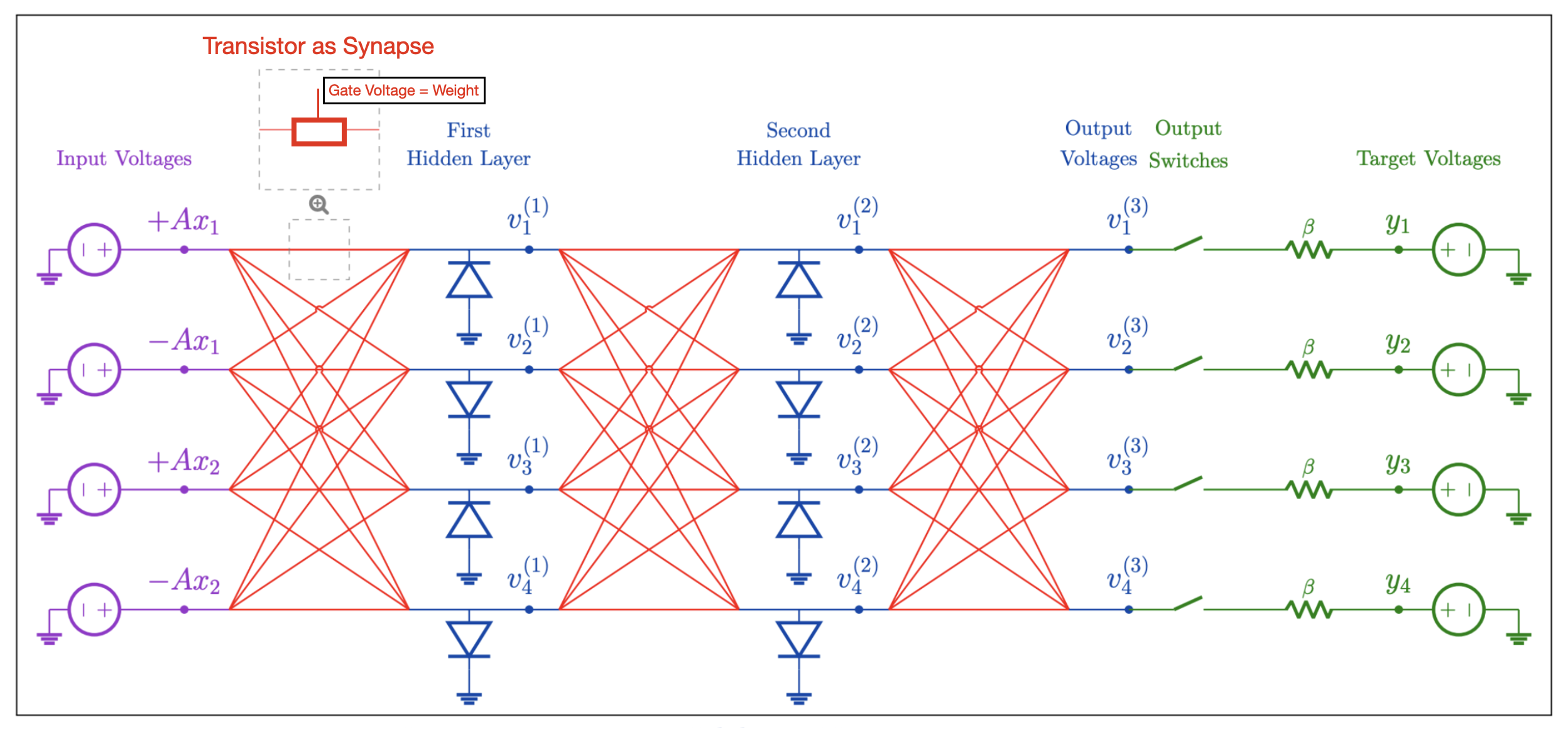

An Analog Circuit Training A Multi-Layer Perceptron

I designed a circuit capable of training a multi-layer perceptron fully in analog, and ran a transient SPICE simulation of it using the SkyWater 130 nm PDK. It uses crossbar arrays built out of modified 2T1C DRAM cells to perform (nonlinear) matmuls, and op-amps as activation functions. Exact gradient updates across multiple layers, taking device non-idealities into account, are computed and applied fully in analog using the equilibrium propagation algorithm.
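For readers who have not met equilibrium propagation before, here is a minimal software sketch of the idea in NumPy. It is not the circuit: it assumes ideal linear weights instead of the nonlinear 2T1C crossbar, uses `tanh` as a stand-in for the op-amp activation, and settles the network with plain Euler steps. The energy function, the function names (`relax`, `ep_step`) and all hyperparameters are illustrative assumptions. The network first settles freely to its prediction, then settles again with the output weakly nudged toward the target, and each weight is updated from the difference between the two equilibria using only quantities local to that weight.

```python
import numpy as np


def rho(s):
    """Smooth activation; a stand-in for the op-amp nonlinearity (assumption)."""
    return np.tanh(s)


def rho_prime(s):
    return 1.0 - np.tanh(s) ** 2


def relax(x, target, W1, W2, beta, h, y, dt=0.2, steps=200):
    """Settle hidden state h and output y by gradient descent on the total energy.

    Energy: E = 0.5|h|^2 + 0.5|y|^2 - rho(h).(W1 x) - y.(W2 rho(h))
    Total:  F = E + beta * 0.5|y - target|^2
    """
    for _ in range(steps):
        dh = h - rho_prime(h) * (W1 @ x + W2.T @ y)      # dF/dh
        dy = y - W2 @ rho(h) + beta * (y - target)       # dF/dy
        h = h - dt * dh
        y = y - dt * dy
    return h, y


def ep_step(x, target, W1, W2, beta=0.1, lr=0.2):
    """One equilibrium-propagation update; modifies W1 and W2 in place.

    Returns the free-phase loss measured before the update.
    """
    h0 = np.zeros(W1.shape[0])
    y0 = np.zeros(W2.shape[0])
    # Free phase (beta = 0): the network settles to its prediction.
    h_free, y_free = relax(x, target, W1, W2, 0.0, h0, y0)
    # Nudged phase: start from the free equilibrium, weakly pull y toward target.
    h_nudge, y_nudge = relax(x, target, W1, W2, beta, h_free, y_free)
    # Contrastive update: difference of local activity products between phases.
    dW1 = (np.outer(rho(h_nudge), x) - np.outer(rho(h_free), x)) / beta
    dW2 = (np.outer(y_nudge, rho(h_nudge)) - np.outer(y_free, rho(h_free))) / beta
    W1 += lr * dW1
    W2 += lr * dW2
    return 0.5 * np.sum((y_free - target) ** 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W1 = 0.2 * rng.standard_normal((4, 3))   # input -> hidden
    W2 = 0.2 * rng.standard_normal((2, 4))   # hidden -> output
    x = np.array([1.0, -0.5, 0.25])
    target = np.array([0.5, -0.3])
    losses = [ep_step(x, target, W1, W2) for _ in range(100)]
    print("first loss:", losses[0], "last loss:", losses[-1])
```

The point of the sketch is that no separate backward pass appears anywhere: the same relaxation that produces the prediction also carries the error signal, which is what makes the algorithm a natural fit for a physical circuit that settles on its own.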